The Monthly Salon: November

Are you worried about AI doom?

This is the monthly community Salon at New World Same Humans, a newsletter on trends, technology, and society by David Mattin.

If you’re reading this and haven’t yet subscribed, join 25,000+ curious souls on a journey to build a better future 🚀🔮

When I rebooted the newsletter last month after a brief pause, I promised to start a Monthly Salon. Here is the first!

Think of these Salons as the online equivalent of a 17th-century London coffee house. A space for lively and energising discussion; a room open to those in the know, where you can eavesdrop on a fascinating debate or start one yourself.

That will all happen in the comment threads. To contribute, click through to the article page on the web (just hit the headline at the top of the email), scroll down, and dive in.

I’ll play an active role in the discussions, of course, and attempt to answer every question aimed at me. So ask away!

Feel free to take the conversation in any direction you see fit under the broad banner that guides this newsletter: trends, technology, and our shared future.

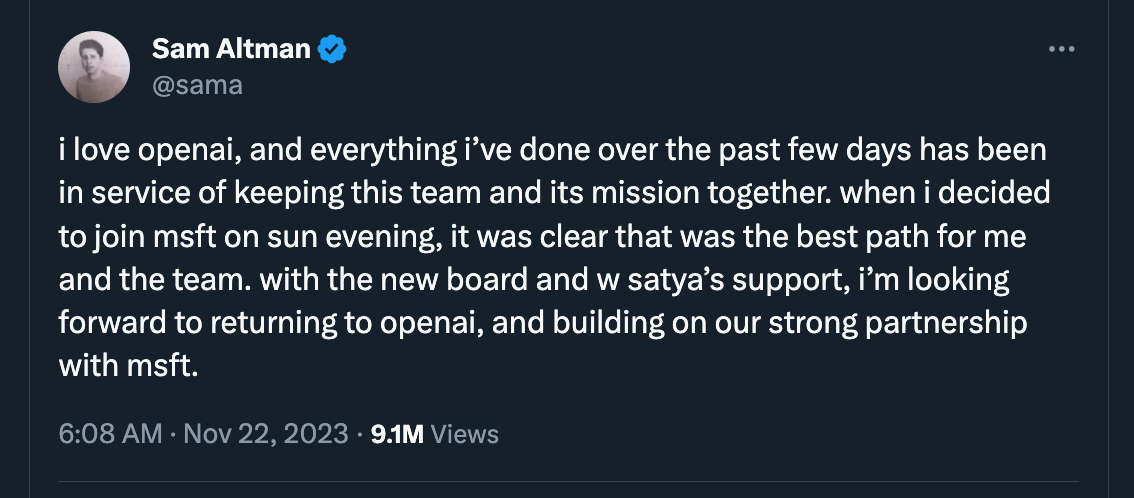

But each month I’ll throw out a thought starter to get us talking. This morning came news that Sam Altman is back as CEO of OpenAI:

It’s been a rollercoaster five days.

It’s still not clear what led the OpenAI board to move against Altman. But rumours abound that this was about a split between those inside the company worried about existential risks to humanity posed by machine intelligence, and those less worried. Between those who want to slow down and those — with Altman among them — keen to speed up.

We’re all hearing a great deal, right now, about the possibility that we’ll lose control of superintelligent AI. That it might radically destabilise our societies, or even wipe out human beings. This is the so-called AI doom scenario.

This month I want to ask: what do you think of all this talk about AI doom? Are you worried? If so, what form does that worry take? Or do you have smaller-scale but still significant concerns about AI? Perhaps you’re worried about its impact on your career, or what it means for the future your children will inhabit?

Or do you think these concerns are overblown? Is there a risk we overreact, and slow progress on what could be the most powerful technology for good we’ve ever devised?

Wherever you stand, dive into the comments and share! I’ll discuss my thinking on all this in the comments, too.

Remember, this monthly theme is only a conversation starter: feel free to take the conversation where you like.

And with that, over to you.

Welcome to the Salon; let's talk!

As for my views on all this, I think talk of AI doom is one part of a larger cultural phenomenon right now; one I'm thinking a lot about.

It seems to me that we're ever more caught, as a culture, between stories of tech-fuelled transcension of all human limits and stories of collapse. Between those who believe we'll soon be able to command all knowledge, conquer death, become infinite and all-knowing and fly to Mars, and those who believe that we're on the eve of collapse into some form of post-civilisational afterworld.

I want to understand more about what's driving those stories, and where they're leading us. I think that at the root of this 'transcension vs collapse' narrative is a loss of faith in our collective agency: in the ability of all of us to come together and shape our shared future. We're no longer telling ourselves stories about the brighter futures that could come to be if we build them. And in the void left by this loss of faith, all that remains are the transcension narratives of the techno-optimists and the other side of that coin: the collapse narratives of those who believe tech is going to doom us all.

I also feel there is not enough regulatory oversight here and, even worse, too much focus on profit. This is not just any new technology, but one that could well be disastrous for the world. And I don't mean that as a doomsday reflex. I'm referring to the problem that has hit the world with social media, whose dangerous effects we have only started to understand in the past few years. No regulation, no oversight, and look at what it has done. Not a lot of good for Western democracies!

AI has even greater potential to harm our societies, and that is well before a singularity event happens and "Skynet" bombs humanity off the planet.