Welcome to the mid-week update from New World Same Humans, a newsletter on trends, technology, and society by David Mattin.

If you’re reading this and haven’t yet subscribed, join 24,000+ curious souls on a journey to build a better future 🚀🔮

To Begin

I put the newsletter on pause last week; did I miss anything?

Given what is unfolding right now, it’s hard to make this newsletter anything other than a generative AI revolution update. I don’t want to stoke the hype yet further, but I’ve never seen anything quite like this.

So this week we’ll dive into a high-profile petition to pause work on new generative models. We’ll also look at the new hyperreality taking shape around us via Midjourney and its community of inventive users.

But it’s not all AI; there’s also an intriguing new report on global population change from the Club of Rome.

Let’s get into it.

🤖 For the people

This week brought another generative AI story that pushes 2023 deeper into what, until recently, seemed the realm of science fiction.

It’s not yet another platform, plugin, or viral image (more on those below) but a call to slow down. Over 1,000 technology leaders signed a petition demanding a pause of at least six months on the training of AI systems more powerful than GPT-4.

Signatories included Elon Musk, Yuval Noah Harari, Stability AI’s Emad Mostaque, and Apple co-founder Steve Wozniak. And their language was pretty apocalyptic.

According to the authors of the petition, ‘recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control.’

A research paper published last week lent weight to their concern. In it, Microsoft researchers report that GPT-4 shows ‘sparks of AGI’. The model, they point out, shows high-level competence across mathematics, coding, vision, medicine, law, and psychology, and can solve novel problems in those domains without any need for special instructions: ‘in all of these tasks, GPT-4's performance is strikingly close to human-level performance’.

Meanwhile, the iconic and ever-controversial AI safety expert Eliezer Yudkowsky went full pelt in Time magazine. He didn’t sign the petition, he says, because it doesn’t go far enough.

All training of large models, says Yudkowsky, needs to be shut down indefinitely and worldwide. He says the governments of Earth must come together in a concerted effort to stop an AI-fuelled human extinction.

⚡ NWSH Take: Pretty intense, right? Yudkowsky is, and has long been, an outlier on all this. Meanwhile, others say this week’s petition signatories have fallen prey to OpenAI’s apocalypse marketing: a plan to get everyone scared and then sell subscriptions. // For my part, I don’t think AI annihilation is imminent; nor do I think these fears are founded only in hype. GPT-4’s competence across all kinds of reasoning tasks is insane. And for all the reams of coverage (guilty), I don’t think we’re anywhere near processing the implications. It no longer seems far-fetched that an AI model could start behaving in strange and uncontrollable ways in the near term. It’s emphatically time to get serious about alignment. // Alignment is first a technical problem: how do we make sure AIs only do what we want them to do? After this, though, it becomes a political problem. Whose values should we align our AIs with? Those of Californian tech bros? // We can’t put the AI genie back in the bottle, and in practice a global ‘pause’ is highly unlikely. That means the only answer here is to speed up research on the technical challenge of alignment, and to allow a plurality of AIs, empowering different peoples and communities to live and create according to their own value systems. That is real alignment. To that end, check out open source AI group LAION’s petition for a new internationally funded supercomputer to train open source foundation models.

📈 Growth mindset

Also this week, a huge-if-true forecast on the future of the human population.

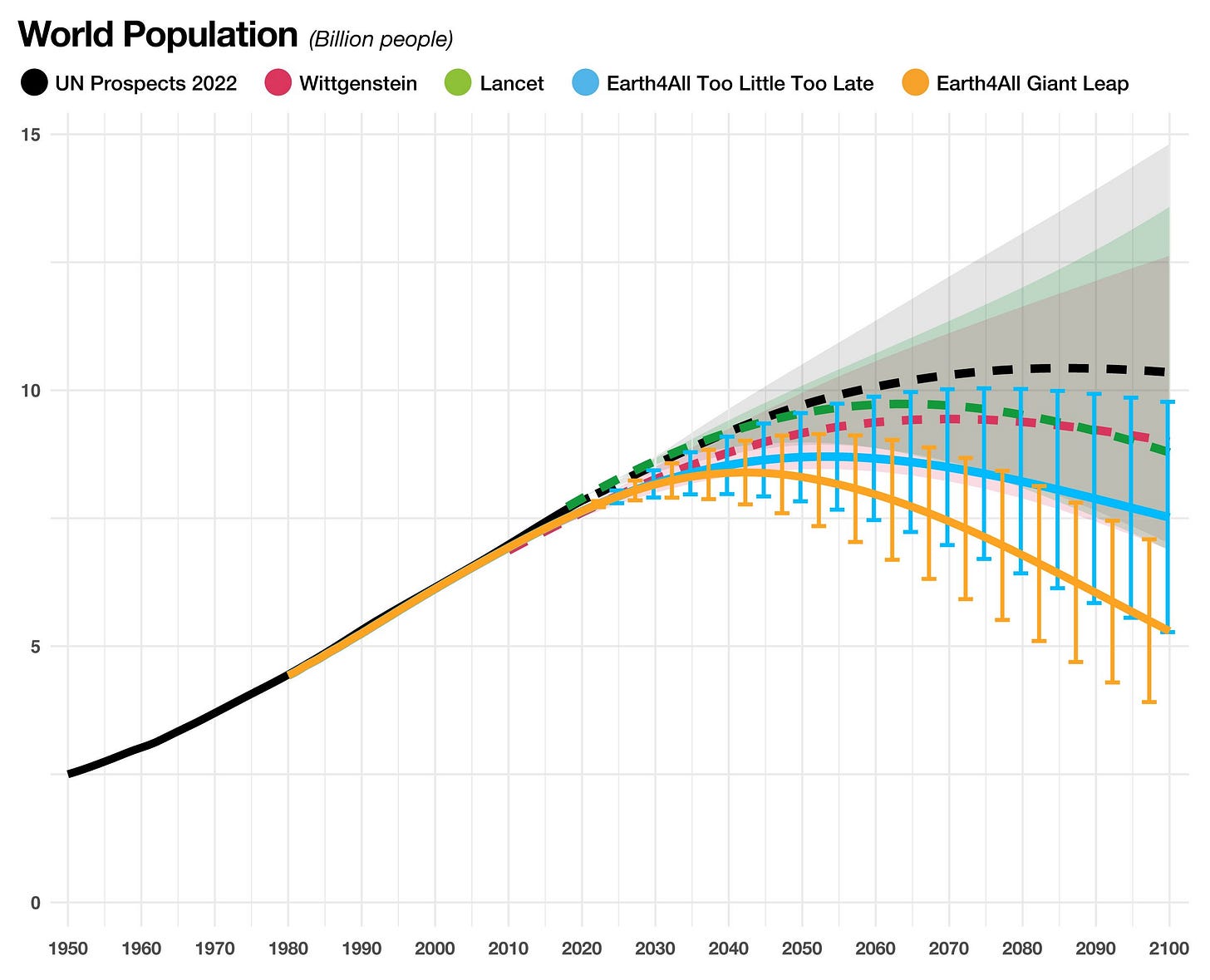

A new study commissioned by the Club of Rome forecasts that if current trends continue then the global population will hit 8.8 billion in around 2050, before declining rapidly to 7.8 billion by the end of the century.

The study, conducted by think tank Earth4All, also games out a scenario in which governments invest in policies known to curtail population growth, such as education and social services. Here, population peaks at 8.5 billion in around 2040 and falls to 6 billion by 2100.

Both projections are far below last year’s UN World Population Prospects forecast, which had population peaking at 10.4 billion in the 2080s.

The Club of Rome is best known for the now (in)famous 1972 report The Limits to Growth, which warned of impending environmental crisis and social breakdown due, in part, to strains imposed by overpopulation.

The report came amid a wave of neo-Malthusian anxiety in the decades after WWII. A 1968 book called The Population Bomb — which influenced the thinking of the Club of Rome — raised the spectre of hundreds of millions of people starving to death as population growth exceeded food supply.

⚡ NWSH Take: The original Limits to Growth report is today the subject of fierce disagreement. Critics say the Club gave voice to unfounded fears motivated by an ideological distaste for modernity. Proponents point out that the report offered a number of different scenarios, and that the growth-induced systemic breakdown it envisioned may yet eventuate. // This new statement on population could end up being just as contested. The Club now accept that their population bomb won’t go off. And they celebrate their finding that population is set to peak sooner and lower than the UN expect, stressing that this is good news for the environment. Meanwhile, though, a niche but growing school of thought says that population collapse is the real crisis coming down the track; rapidly shrinking and ageing populations, runs this line, will kill productivity and threaten economic collapse. // Where does the truth lie? Most mainstream demographers say population collapse isn’t on the cards, and that ageing populations don’t have to mean economic calamity. And it’s not overpopulation but high, damaging patterns of consumption in the rich world that are the primary drivers of climate change. As ever with demography, it seems the truth lies between the extremes.

🎭 Real life

Version five of the text-to-image tool Midjourney was released two weeks ago. And this week, users went wild.

On Reddit, Midjourney enthusiasts started sharing photorealistic, news-report-style images of historical events, such as 2001’s devastating Great Cascadia earthquake in Oregon.

The truth, of course, is that no such event took place; this is all fictitious, an AI-fuelled experiment in alternative history.

Meanwhile, Chinese users of the tool are creating pseudo-documentary images of the southwestern city of Chongqing in the 1990s.

All this comes days after the first truly viral AI-generated image: of Pope Francis in a white puffer coat.

⚡ NWSH Take: In his 1981 book Simulacra and Simulation, the French philosopher Jean Baudrillard wrote about hyperreality: the emergence of a media environment in which the boundaries between the real and our representations of the real become ever more blurred. Digital media massively amplified that phenomenon. All of us recognise the feeling, today, of living inside a tech-fuelled hall of mirrors in which the difference between image and reality is hard to discern, or even meaningless. // And all that was before this generative AI revolution and tools such as Midjourney, which are now achieving a photorealism that is impossible to distinguish from the real thing. These AI-generated pseudo-photos are perfect representations of representations; signs that point only to other signs, which is exactly the phenomenon that Baudrillard put at the heart of his theory. They make possible a whole new level of alternate history: a convincing mass media documentation of events that never took place. There’s going to be so, so much more of this.

🗓️ Also this week

🐰 This Twitter user made an AI-fuelled virtual companion by hooking ChatGPT up to a cute but grumpy holographic rabbit avatar. It’s just one signal of how the generative AI revolution will unleash a tsunami of virtual companions; the rise of this trend is a longstanding NWSH obsession.

🧠 Direct brain interface startup Neuralink is searching for a partner to help it run clinical trials on humans. In 2022 the FDA rejected Neuralink’s application to start human trials; the company has since been working to address the safety concerns that were raised.

🛩 Swiss startup Destinus is working on a hydrogen-powered jet that it says will cut flights from Europe to Australia to four hours. The company has been testing prototypes for two years, and is now partnering with Spain’s Ministry of Science. It currently takes around 20 hours to fly from Europe to Australia.

👨‍💻 A new report says generative AI tools such as ChatGPT could affect the equivalent of 300 million full-time jobs across the globe. The report by Goldman Sachs economists says the technology is ‘a major advancement with potentially large macroeconomic effects.’ But most jobs, they say, will be complemented by AI rather than replaced entirely.

🛒 Chinese ecommerce titan Alibaba is planning to break itself up. The company says it will split into six business units, some of which may be listed or sold. The announcement seems intended to placate the CCP, which over the last three years has moved aggressively to diminish the power of domestic tech giants.

🏰 Disney has reportedly eliminated its entire metaverse division. Last year the entertainment giant called the metaverse ‘the next great storytelling frontier’ and announced plans to bring blended digital-physical experiences to its parks. The company has recently been under pressure from investors to cut costs.

⛔️ This just in as the newsletter goes to press: the Italian government has banned ChatGPT, citing concerns over data privacy breaches. The Italian Data Protection Authority says the move is temporary and will be revoked ‘when ChatGPT respects privacy’. OpenAI CEO Sam Altman says the company ‘defers to the Italian government’, but believes it has followed all relevant privacy laws.

🌍 Humans of Earth

Key metrics to help you keep track of Project Human.

🙋 Global population: 8,025,029,075

🌊 Earths currently needed: 1.8010517836

💉 Global population vaccinated: 64.4%

🗓️ 2023 progress bar: 25% complete

📖 On this day: On 1 April 1976, Steve Wozniak and Steve Jobs founded Apple Computer in California.

Speed Warning

Thanks for reading this week.

The ever more urgent quest to align machine intelligence with our values is yet another classic case of new world, same humans.

I’ll keep watching. And there’s one thing you can do to help: share!

Now you’ve reached the end of this week’s instalment, why not forward the email to someone who’d also enjoy it? Or share it across one of your social networks, with a note on why you found it valuable. Remember: the larger and more diverse the NWSH community becomes, the better for all of us.

I’ll be back next week. Until then, be well,

David.

P.S. Huge thanks to Nikki Ritmeijer for the illustration at the top of this email. And to Monique van Dusseldorp for additional research and analysis.