Life in the Mirrorworld

LLMs and the rise of simulated human societies

Welcome to this update from New World Same Humans, a newsletter on trends, technology, and society by David Mattin.


A few weeks back, my favourite AI researcher dropped a new paper.

It’s called Generative Agent Simulations of 1,000 People. Not a catchy title. But I believe this research will eventually change the world. That’s why I’ve chosen it as the subject of this first in my new series of weekly postcards.

The researcher in question is Joon Sung Park; he’s a PhD student at Stanford University.

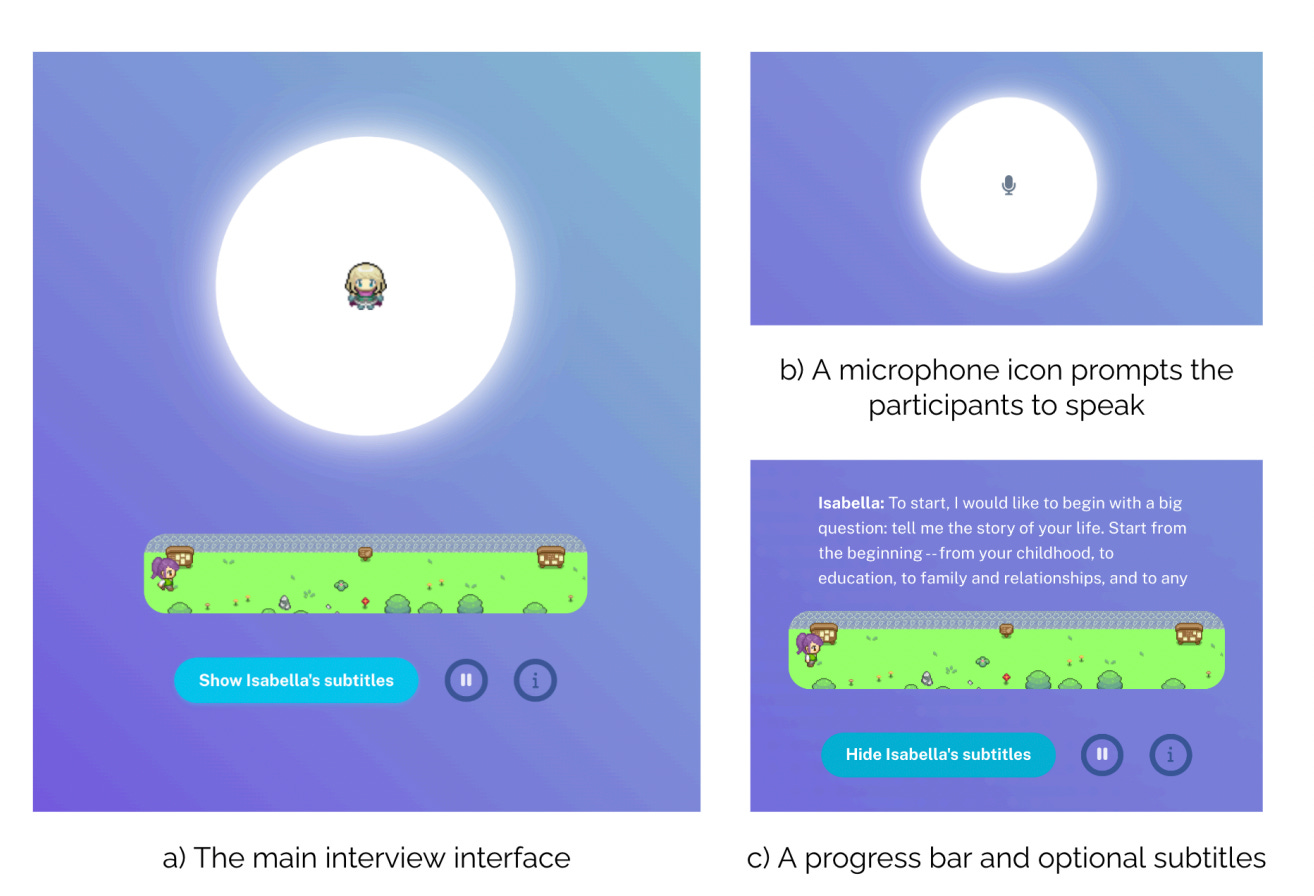

He and his team interviewed 1,052 people. They asked them to speak for two hours about themselves and their everyday lives, beliefs and values.

Park fed that input into a large language model and used it to create 1,052 AI agents, each one based on a specific interviewee.

And then his team saw something amazing. These AI agents do a great job of simulating their human counterparts.

To test this, Park took the synthetic people he’d built and asked them questions from the General Social Survey: an instrument often used to gauge opinions on social and cultural issues. Then he asked his human subjects the same questions. He found that the AI agents matched the answers of their human equivalents 85% as accurately as those people matched their own answers when they retook the survey two weeks later.
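For the curious, here is a minimal sketch of how a score like this could be computed. It is an illustration and not the paper's actual code: the function names and the five made-up answers are my own assumptions. The idea is to measure how often an agent agrees with its human, then divide by how often that human agrees with themselves on a retest, since people are not perfectly consistent either.

```python
def agreement(a, b):
    """Fraction of questions on which two answer lists match."""
    if len(a) != len(b) or not a:
        raise ValueError("answer lists must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def normalized_accuracy(agent, human_first, human_retest):
    """Agent-vs-human agreement, scaled by the human's own retest consistency.

    Hypothetical helper for illustration only.
    """
    raw = agreement(agent, human_first)
    self_consistency = agreement(human_retest, human_first)
    if self_consistency == 0:
        raise ValueError("human retest shares no answers with the first sitting")
    return raw / self_consistency


# Made-up answers to five survey questions (not real data).
human_first = ["agree", "disagree", "agree", "neutral", "agree"]
human_retest = ["agree", "disagree", "agree", "agree", "agree"]
agent = ["agree", "disagree", "neutral", "agree", "agree"]

raw_score = agreement(agent, human_first)
score = normalized_accuracy(agent, human_first, human_retest)
print(f"raw agreement: {raw_score:.2f}, normalised: {score:.2f}")
```

Dividing by the retest figure means a normalised score of 1.0 would say the agent predicts a person about as well as that person predicts themselves.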

The implications are huge.

For decades, sociologists have dreamed of building accurate simulations of human societies. One of the first computational social simulations was called Sugarscape. It aimed to model the social dynamics that arise via competition for scarce resources.

Sugarscape and its many successors leveraged a technique called agent-based modelling (ABM). The synthetic people — or agents — inside these simulations would follow a set of simple, hand-coded rules.
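To make "simple, hand-coded rules" concrete, here is a toy sketch in the spirit of Sugarscape. It is not the original model: the grid size, the sugar values, the regrowth rate and every name in it are assumptions chosen for illustration. Each agent follows just two rules: move to the sweetest nearby cell, then eat and burn some sugar. Agents that run out die.

```python
import random


def step(grid, agents, max_sugar=4):
    """Advance the toy world by one tick using two hand-coded rules."""
    size = len(grid)
    survivors = []
    for agent in agents:
        x, y = agent["pos"]
        # Rule 1: look at the current cell and its four neighbours
        # (edges wrap around) and move to the one holding the most sugar.
        options = [
            (x, y),
            ((x + 1) % size, y),
            ((x - 1) % size, y),
            (x, (y + 1) % size),
            (x, (y - 1) % size),
        ]
        x, y = max(options, key=lambda p: grid[p[0]][p[1]])
        agent["pos"] = (x, y)
        # Rule 2: harvest the cell, then pay the metabolic cost.
        agent["sugar"] += grid[x][y] - agent["metabolism"]
        grid[x][y] = 0
        if agent["sugar"] > 0:
            survivors.append(agent)
    # Sugar grows back by one unit per tick, up to a cap.
    for row in grid:
        for i, value in enumerate(row):
            row[i] = min(max_sugar, value + 1)
    return survivors


def run(size=10, n_agents=20, ticks=30, seed=0):
    """Run the simulation and return the population after each tick."""
    rng = random.Random(seed)
    grid = [[rng.randint(0, 4) for _ in range(size)] for _ in range(size)]
    agents = [
        {
            "pos": (rng.randrange(size), rng.randrange(size)),
            "sugar": 5,
            "metabolism": rng.randint(1, 3),
        }
        for _ in range(n_agents)
    ]
    history = [len(agents)]
    for _ in range(ticks):
        agents = step(grid, agents)
        history.append(len(agents))
    return history


if __name__ == "__main__":
    print(run())
```

For simplicity, several agents may share a cell here, and no agents are ever born. Everything these agents can do is spelled out in those two rules.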

But human behaviour isn’t simple. It’s chaotic and unpredictable. It certainly doesn’t follow a set of hard rules. So the social simulations based on ABM weren’t that good.

Now, the work of Park and others shows that LLMs are a powerful answer to this challenge. Inside LLMs, we can build synthetic people that really do mirror human behaviour in all its messy complexity.

It turns out that predictive models of human behaviour are deeply embedded in LLMs — latent in the vast and intricate tapestry of language that lies at their centre. The result is a revolution for social simulations.

So what next?

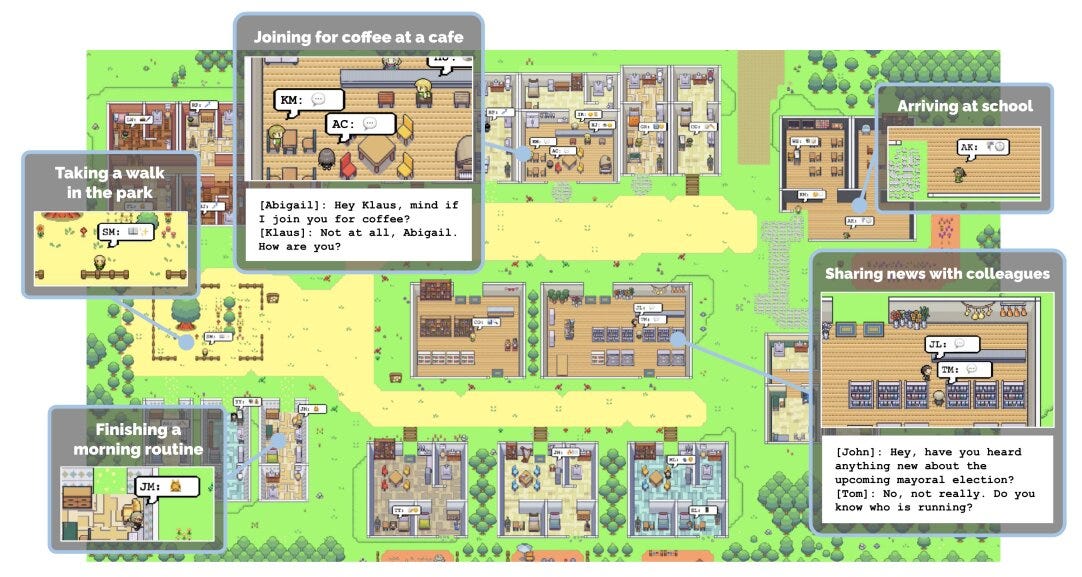

Other work by Park signals the way forward. In 2023, his team created a handful of LLM-fuelled synthetic humans, and set them loose inside a virtual town called Smallville. They asked these AI-people to arrange a Valentine’s Day party. And then sat back as the inhabitants of Smallville started chatting to one another, buying party food, and drawing up invites.

None of the behaviour was pre-programmed. It just emerged.

Smallville held only 25 synthetic people. But imagine a simulated nation of millions. Via exponential increases in compute power, those nations are coming.

I’m convinced that the next technology megaplatform will be a planetary-scale social simulation. One that contains billions of virtual people, each living a complex life inside a rich environment, and each interacting with many others.

Corporations, governments, scientists — everyone will want access to this mirrorworld. Soon enough, it will be a reality all of its own.

I’ll keep watching. And keep sending you these Postcards from the New World.

Be well,

David.

This was #1 in the series Postcards from the New World, from NWSH.
