AI as electricity, AI as magic

The message hidden inside DeepSeek's R1

Welcome to this update from New World Same Humans, a newsletter on trends, technology, and society by David Mattin.

If you’re reading this and haven’t yet subscribed, join 28,000+ curious souls on a journey to build a better future 🚀🔮

In AI, there are decades where nothing happens, and weeks where decades happen. Recently we’ve grown accustomed to the latter.

DeepSeek’s new R1 reasoning model dominated tech news this week. The Chinese startup say they found huge efficiency gains that allowed them to train R1 with far less compute, and at an accordingly lower cost (just $5.5 million), than was used for comparable models. Its performance rivals that of OpenAI’s o1. What’s more, DeepSeek have open sourced R1, making it free to use and build on.

This sent a shockwave through Silicon Valley, and panicked the markets. Big Tech is spending tens of billions of dollars on AI chips, because we all thought they’d be needed to train the next generation of models. R1 throws that into doubt. Who will buy all those Nvidia chips now? And what happens to OpenAI if startups can create comparable models for a few million?

There’s some doubt about DeepSeek’s claims on costs. I suspect we’ll discover more.

Still, R1 is telling us something useful. And that something is captured by a framework that has long been at the heart of my thinking. It is AI as electricity, AI as magic.

This framework is an attempt to capture something essential about the way AI will manifest in the years ahead. To capture, that is, AI’s strange dual nature.

First, take AI as electricity. One core characteristic of the Exponential Age is its deep merging of information and physical reality. That’s happening via a plethora of sensors and microchips, which are being distributed across the built environment. AI will do useful work on the information all those sensors and microchips generate, and so help fuel a coming army of robots, autonomous vehicles, and connected objects.

Intelligence will be abundant, and woven through everything. It will be something akin to a new form of energy.

On the other hand, there is AI as magic. Intelligence will create amazing stories, images, and films. It will automate complex lifestyle tasks. It will do the kinds of cognitive and creative work we once believed only humans would ever be able to do.

This is AI as magic. Look, flamingos!

That was AI magic from Google’s new Veo 2 video model.

One implication of this framework? The AI as electricity business will be hard. Much of the value will be pushed up the chain to AI as magic.

AI as electricity imagines a world in which intelligence is ubiquitous. In other words, in which it is a commodity. A world in which there is a plethora of ‘good enough’ LLMs, many of them open-source. As with any commodity business, the result for suppliers will be a race to the bottom on price.

We were already able to see that world emerging. Look at Meta’s open-source Llama models and others. It was already hard to see how OpenAI could build a huge business simply by selling access to great LLMs. DeepSeek — and the kind of intelligence abundance it points towards — only makes that more difficult.

Instead, and contrary to the AI narrative across the last few years, much of the money will be made not by those who create the AI, but by those who use it to deliver magic to end users. The value, in other words, will be at the app layer.

All through this generative AI moment, many have been dismissive about so-called ‘GPT wrappers’. It’s becoming clear they were wrong. Wrappers — that means apps built on top of LLMs — will be where it’s at.

So, who is best positioned to deliver AI magic? The answer is: those with deep wells of user data, which will allow them to craft AI experiences that resonate with people. And those with vast distribution, which allows them to deliver this magic at scale.

Data, human context, and distribution: who has all that? Meta, Google, Microsoft, and Apple, of course.

Via AI magic, the tech giants will reach even further into our lives, and consolidate their huge power. To those who have won, more winning will be given.

This probably isn’t great news. But that’s a postcard for another day.

Meanwhile, when we analyse OpenAI we need to understand their primary underlying motivation. They are scrambling to get beyond the commoditised LLM business, and to get onto the magic side of the ledger. Remember, ChatGPT is itself a clever wrapper around a set of underlying models.

It really worked as a first stab at magic. I wonder what else they have up their sleeve.

I’ll be back next week. Until then, be well,

David.

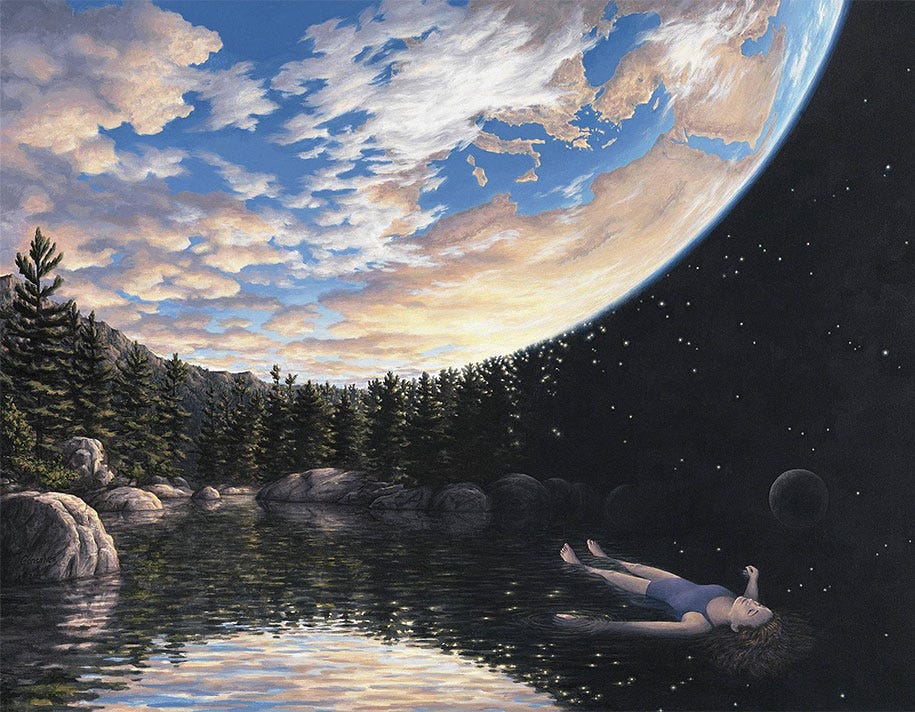

This was #6 in the series Postcards from the New World, from NWSH. The title artwork is The Phenomenon of Floating by the Canadian surrealist artist Rob Gonsalves.

So VEO misidentified Flamingos as Pelicans? Or was it you?

Pelicans? Are you being ironic?